The experimentation phase is ending. After three years of pilots, proofs of concept, and cautious testing, enterprises are preparing to make consequential decisions about artificial intelligence in 2026. The question is no longer whether to adopt AI but how to scale it strategically and which bets to make as the technology evolves faster than most organizations can absorb.

The data points toward a pivotal year. According to Anthropic's 2026 State of AI Agents Report, 80 percent of organizations say their AI agent investments are already delivering measurable economic returns: not projected value or pilot results, but actual ROI. And 81 percent plan to tackle more complex use cases in the coming year, moving beyond simple task automation toward multi-step processes and cross-functional deployments.

Yet significant obstacles remain. An MIT survey found that 95 percent of enterprises still see no meaningful return on their AI investments, even as adoption doubles. The gap between AI's promise and its realized value continues to define the market, and closing that gap will determine which organizations pull ahead in 2026.

What follows are the ten trends most likely to shape enterprise AI over the next twelve months, drawn from research, investor perspectives, and the emerging patterns visible in how leading organizations are deploying the technology.

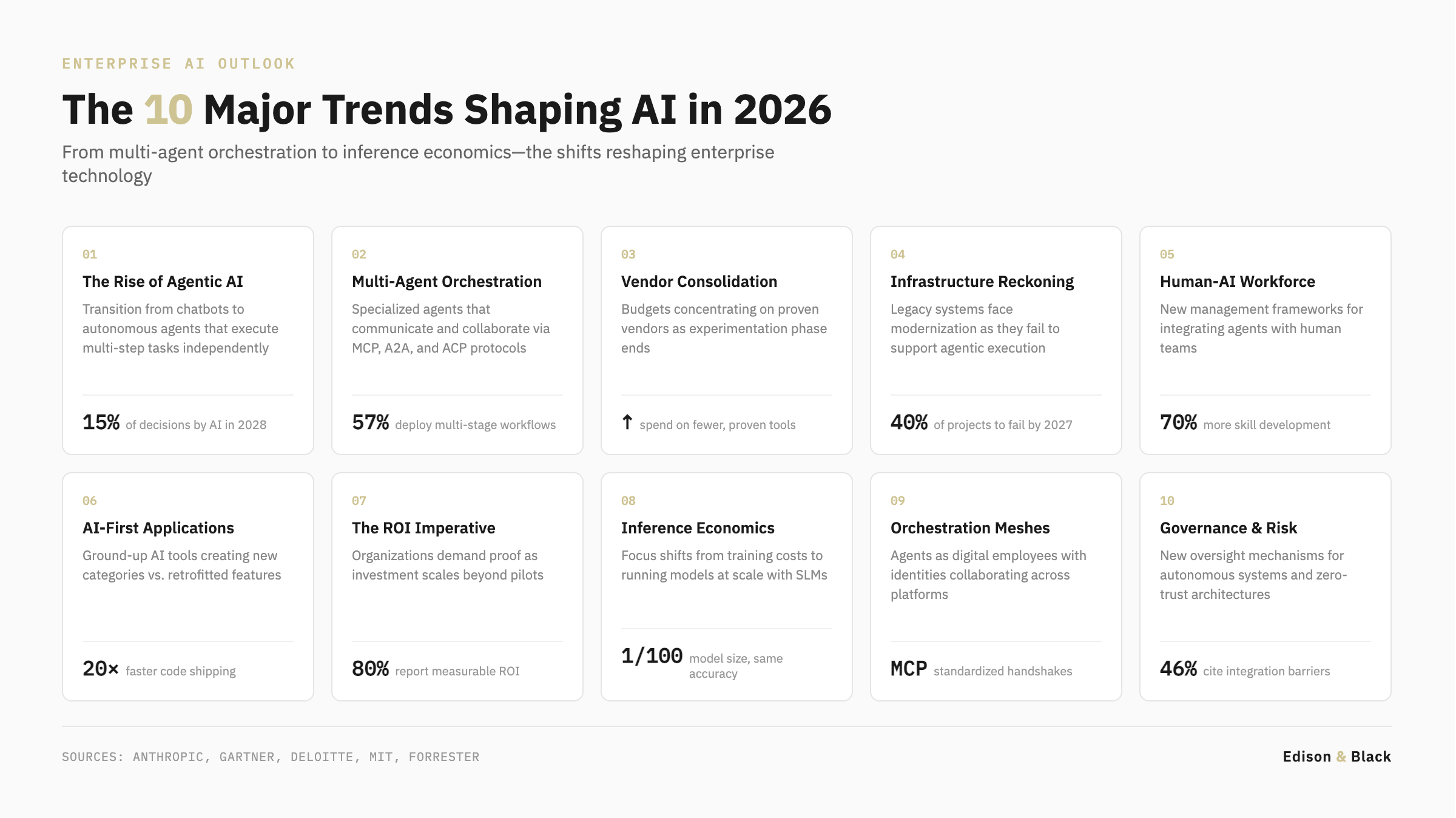

1. The Rise of Agentic AI

The most significant shift in enterprise AI is the transition from chatbots to agents, systems that don't just answer questions but autonomously execute multi-step tasks across business processes.

This is not a marginal upgrade. Agents represent a fundamentally different paradigm. Where traditional AI tools wait for human input at each step, agents reason through problems, make decisions, and take action independently. They can conduct market research, write and debug code, analyze datasets, and coordinate workflows that span multiple systems and teams.

The momentum is striking. Gartner predicts that 15 percent of day-to-day work decisions will be made autonomously through agentic AI by 2028, up from essentially none in 2024. It also expects 33 percent of enterprise software applications to include agentic AI by 2028, compared with less than 1 percent in 2024.

Meta's recent $2 billion acquisition of Manus, a startup known for general-purpose AI agents, signals how seriously large technology companies are taking this shift. The deal, reportedly Meta's third-largest acquisition ever, reflects the intense competition to secure high-performing AI talent and technology capable of handling end-to-end user requests.

According to Anthropic's research, more than half of organizations (57 percent) now deploy agents for multi-stage workflows, with 16 percent progressing to cross-functional processes spanning multiple teams. In 2026, 39 percent expect to develop agents that handle multi-step processes, while 29 percent plan to deploy them for cross-functional projects.

The implication is clear: organizations that master agentic deployments can unlock advantages in speed, consistency, and scale that simple automation cannot match. This is where AI moves from incremental efficiency gains to enabling entirely new ways of working.

2. Multi-Agent Orchestration Becomes the New Architecture

The first wave of enterprise AI involved deploying individual agents for specific tasks. The next wave involves orchestrating multiple specialized agents that communicate, collaborate, and hand off work to accomplish complex business outcomes.

This represents a fundamental architectural shift. Rather than building monolithic AI solutions that attempt to handle everything, leading organizations are adopting what experts describe as a "microservices approach to AI": deploying numerous smaller, specialized agents across various platforms, closer to where workflow instructions and data reside.

The advantages are substantial. Smaller agents are easier to debug, test, and maintain. Multiple specialists can be combined dynamically for complex tasks. And the approach offers platform flexibility, allowing agents to run on different systems while maintaining interoperability.

Think of it as the difference between hiring one generalist employee to handle all customer operations versus assembling a team of specialists, each expert in their domain, who coordinate seamlessly. A customer inquiry might flow from a triage agent to a technical specialist to a billing expert to a satisfaction monitor, with each handoff invisible to the customer but precisely orchestrated behind the scenes.
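The triage-to-specialist flow described above can be sketched as a small orchestrator. This is a minimal illustration under stated assumptions, not any vendor's framework: the agent functions, the keyword-based triage rule, and the `Ticket` fields are all hypothetical stand-ins for model-backed agents.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Ticket:
    text: str
    category: str = ""
    notes: List[str] = field(default_factory=list)
    trail: List[str] = field(default_factory=list)  # agents that handled the ticket, in order


Agent = Callable[[Ticket], Ticket]


def triage_agent(t: Ticket) -> Ticket:
    # Hypothetical keyword rule standing in for a model-based classifier.
    lowered = t.text.lower()
    t.category = "billing" if ("invoice" in lowered or "charge" in lowered) else "technical"
    t.trail.append("triage")
    return t


def technical_agent(t: Ticket) -> Ticket:
    t.notes.append("checked service status and logs")
    t.trail.append("technical")
    return t


def billing_agent(t: Ticket) -> Ticket:
    t.notes.append("reviewed recent charges")
    t.trail.append("billing")
    return t


def satisfaction_agent(t: Ticket) -> Ticket:
    t.notes.append("scheduled follow-up survey")
    t.trail.append("satisfaction")
    return t


# Specialists the orchestrator can hand off to, keyed by triage category.
SPECIALISTS: Dict[str, Agent] = {"technical": technical_agent, "billing": billing_agent}


def orchestrate(text: str) -> Ticket:
    ticket = triage_agent(Ticket(text))
    ticket = SPECIALISTS[ticket.category](ticket)  # explicit handoff point
    return satisfaction_agent(ticket)


result = orchestrate("I was charged twice on my last invoice")
print(result.trail)  # ['triage', 'billing', 'satisfaction']
```

Because each handoff is an explicit lookup, adding a specialist means registering one more small function that can be tested on its own, which is the maintainability argument made for smaller agents.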

Enabling this coordination requires new protocols that allow agents to discover each other, delegate tasks, and share context. Three frameworks are emerging as foundational infrastructure:

Model Context Protocol (MCP), developed by Anthropic, standardizes how AI systems connect to data sources and tools, providing a universal interface for agents to access enterprise resources.

Agent-to-Agent Protocol (A2A), from Google, enables direct communication between different AI agents across platforms, handling agent discovery, task delegation, and collaborative workflows.

Agent Communication Protocol (ACP) offers an open standard allowing agents to collaborate regardless of the environment in which they were built.
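To make the MCP description concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method, passing the tool's `name` and `arguments`. The sketch below only builds that request; it omits the initialization handshake and the transport, and the `lookup_order` tool is hypothetical.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests need an id unique within the session


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# `lookup_order` is a hypothetical tool an enterprise MCP server might expose.
msg = mcp_tool_call("lookup_order", {"order_id": "A-1001"})
print(msg)
```

The point of the standard is that the agent issues the same shape of request whether the tool fronts a CRM, a database, or a file store.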

These protocols represent the foundational layer for the next generation of enterprise AI. Organizations that build on them now will find it dramatically easier to scale agent deployments and create workflows that would be impossible with isolated tools.

Deloitte's analysis frames this evolution in historical terms: "Henry Ford put it perfectly: 'Many people are busy trying to find better ways of doing things that should not have to be done at all.' He was writing about building automobiles in 1922, but he could just as easily have been describing enterprise AI in 2025."

The insight applies directly to multi-agent architecture. Organizations attempting to automate existing processes with single agents often discover the processes themselves need redesign. Multi-agent orchestration forces this rethinking by requiring clear handoff points, defined responsibilities, and explicit coordination: the same discipline that improves human workflows.

At Toyota, teams are using orchestrated agents to gain visibility into vehicle delivery timelines. The process once involved 50 to 100 mainframe screens and significant hands-on work. Now, coordinated agents deliver real-time information from pre-manufacturing through dealership delivery, with plans to empower agents to identify delays and draft resolution emails before human workers begin their day.

3. Vendor Consolidation and Budget Concentration

The era of unlimited AI experimentation is closing. Enterprises have spent the past two years testing multiple tools for single use cases, exploring overlapping solutions, and maintaining sprawling portfolios of AI vendors. That approach is becoming untenable.

A recent TechCrunch survey of 24 enterprise-focused venture capitalists found overwhelming consensus: enterprises will increase their AI budgets in 2026, but spending will concentrate on a narrow set of vendors that deliver proven results.

"Today, enterprises are testing multiple tools for a single use case, and there's an explosion of startups focused on certain buying centers where it's extremely hard to discern differentiation," said Andrew Ferguson, vice president at Databricks Ventures. "As enterprises see real proof points from AI, they'll cut out some of the experimentation budget, rationalize overlapping tools, and deploy those savings into the AI technologies that have delivered."

The bifurcation will be stark. A small number of vendors will capture a disproportionate share of enterprise AI budgets while many others see revenue flatten or contract. For AI startups, this creates an existential reckoning. Companies with hard-to-replicate products, such as vertical solutions built on proprietary data, will likely keep growing. Startups whose offerings resemble those of large enterprise suppliers may see pilot projects and funding evaporate.

This consolidation extends to the foundational model layer as well. ChatGPT, Claude, Gemini, Copilot, and Perplexity compete on model size, speed, and integrations, but for most users they have become functionally interchangeable. As one analysis noted, this layer is rapidly becoming infrastructure, much like the cloud market contested by AWS, Azure, and Google Cloud, where features converge, prices drop, and users stop caring which engine powers the system as long as it works.

4. The Infrastructure and Integration Reckoning

Most enterprises ready to implement AI agents discover the same uncomfortable truth: their existing systems were not designed for agentic interactions.

Legacy applications lack the real-time execution capability, modern APIs, modular architectures, and secure identity management needed for true agentic integration. Current data architectures, built around extract-transform-load processes and data warehouses, create friction that limits what agents can accomplish. Gartner predicts that over 40 percent of agentic AI projects will fail by 2027 because legacy systems cannot support modern AI execution demands.

Deloitte's 2025 Emerging Technology Trends study quantifies the gap: while 30 percent of organizations are exploring agentic options and 38 percent are piloting solutions, only 14 percent have solutions ready to deploy and a mere 11 percent are actively using these systems in production. Furthermore, 42 percent of organizations report they are still developing their agentic strategy road map, with 35 percent having no formal strategy at all.

The integration challenge tops the list of implementation barriers. Nearly half of organizations (46 percent) cite integration with existing systems as a primary obstacle, while 42 percent point to data access and quality issues.

The solution involves what experts describe as a paradigm shift from traditional data pipelines to enterprise search and indexing: contextualizing enterprise data through knowledge graphs and making information discoverable without extensive ETL processes. Organizations that invest in this foundational work will find agentic deployment dramatically easier; those that try to layer agents onto broken processes will continue to struggle.
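The core idea of indexing data where it lives, rather than copying it through ETL, can be shown with the simplest possible structure: an inverted index that maps terms to record IDs. This is a minimal sketch under assumptions; the record IDs and text are hypothetical, and knowledge-graph contextualization (entities and relationships) is deliberately left out.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    """Maps each term to the IDs of the records that contain it."""

    def __init__(self) -> None:
        self.postings: Dict[str, Set[str]] = defaultdict(set)

    def add(self, doc_id: str, text: str) -> None:
        # Only the record's ID and terms are stored; the record stays in its source system.
        for term in tokenize(text):
            self.postings[term].add(doc_id)

    def search(self, query: str) -> List[str]:
        # AND semantics: return records containing every query term.
        terms = tokenize(query)
        if not terms:
            return []
        matches = set.intersection(*(self.postings.get(t, set()) for t in terms))
        return sorted(matches)


index = InvertedIndex()
index.add("crm:17", "Acme renewal contract expires in March")  # hypothetical CRM record
index.add("erp:42", "Acme invoice overdue since March")        # hypothetical ERP record
print(index.search("acme march"))    # ['crm:17', 'erp:42']
print(index.search("acme invoice"))  # ['erp:42']
```

An agent querying this index gets pointers into the CRM and ERP systems without either system's data being moved into a warehouse first.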

5. The Emergence of Human-AI Workforce Management

As agents mature within job functions, organizations are beginning to recognize that AI represents a new form of labor, one that may require management frameworks as sophisticated as those developed for human workers.

This is not merely metaphorical. Leading enterprises are exploring how to integrate agents with their human workforce in ways that leverage the unique strengths of each. Agents excel at defined processes and can operate continuously without breaks. Humans remain essential for navigating shifting business requirements, complex problem-solving, and the kind of judgment that emerges from experience.

Harvard Business School faculty describe this as the need for "change fitness": the organizational capacity to metabolize significant and ongoing change. At the individual level, this means curiosity, experimentation, and comfort working in human-machine workflows. At the team level, it requires new collaboration patterns and decision rights that match an AI-driven context.

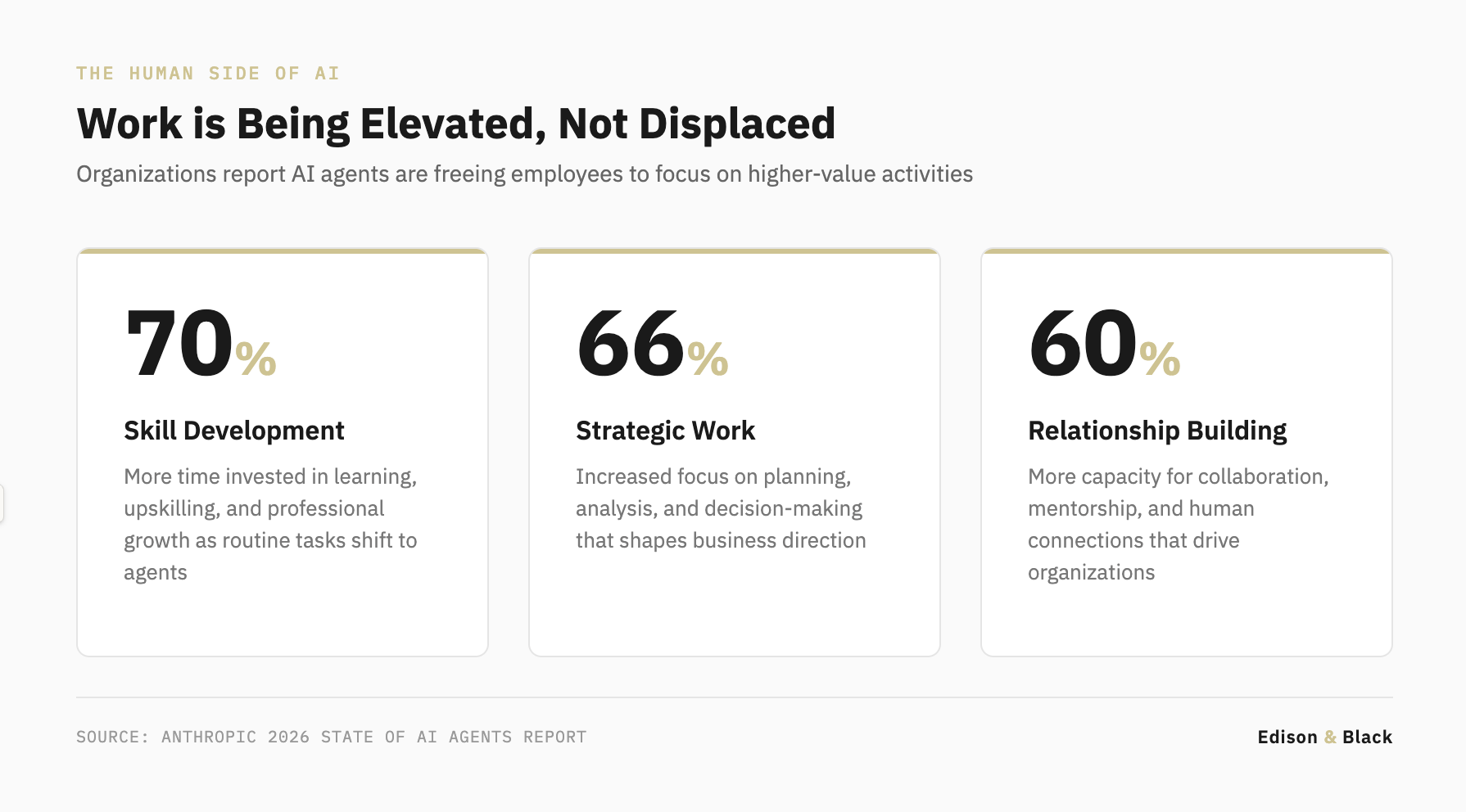

According to Anthropic's research, agents are already shifting how employees spend their time. Organizations report increased focus on strategic work (66 percent), relationship building (60 percent), and skill development (70 percent) rather than routine execution. The data suggests agents are elevating work rather than simply displacing it, handling execution while humans focus on judgment, relationships, and learning.

Some organizations are going further. Biotech company Moderna recently named its first chief people and digital technology officer, combining technology and HR functions to integrate workforce planning with technology planning. The insight: organizations need to think about work planning regardless of whether a person or a technology performs the task.

Specialized AI recruiting agencies are already helping organizations navigate this transition, identifying talent with the AI fluency and adaptability required to thrive in hybrid human-agent environments. The demand for workers who can direct AI, evaluate its outputs, and integrate its capabilities into human workflows is growing faster than nearly any other skill category.

Geoffrey Hinton, the Nobel Prize-winning computer scientist known as the "godfather of AI," offered a stark prediction for the year ahead: AI's progress means that roughly every seven months, it can complete tasks in half the time. On a coding project, AI can now accomplish in minutes what used to take an hour. Within a few years, he said, it will perform software engineering tasks that currently require a month of labor.
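A constant seven-month period in which capability changes by a factor of two compounds quickly: a total change by a factor R takes 7 · log2(R) months. The sketch below applies that formula to the gap between an hour-long task and a month of labor. The constant period and the figure of roughly 160 working hours per month are illustrative assumptions, not part of Hinton's statement.

```python
import math


def months_for_factor(factor: float, period_months: float = 7.0) -> float:
    """Months for a cumulative change of `factor` when each period brings a 2x change."""
    return period_months * math.log2(factor)


# Illustrative assumption: a month of labor is about 160 working hours, versus a one-hour task.
months = months_for_factor(160 / 1)
print(f"{months:.1f} months (about {months / 12:.1f} years)")  # prints "51.3 months (about 4.3 years)"
```

Under these assumptions the result, a little over four years, is broadly consistent with "within a few years"; a shorter or longer period shifts it proportionally.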

The workforce implications are profound. Organizations must develop frameworks for onboarding agents (training them on enterprise-specific data while educating human supervisors), managing agent performance (tracking productivity and quality at scale), and handling agent life cycles (updates, redeployment, and retirement). The companies that figure out human-AI collaboration will build sustainable advantages over those focused solely on automation.

6. AI-First Applications Versus Retrofitted Software

A crucial distinction is emerging between two categories of AI-powered software: legacy applications with new AI features bolted on, and applications that could not exist without AI at their core.

The fastest-growing category of AI adoption right now involves existing software tools adding AI features to stay relevant. Microsoft has embedded Copilot across Word, Excel, and Teams. Canva has Magic Studio for generating and editing images. Zoom summarizes meetings. These upgrades reduce friction and save time, but they don't fundamentally change how teams operate. The core function of each product (writing, editing, tracking, designing) remains the same.

AI-first applications represent something different. These tools are designed from the ground up to leverage large language models or intelligent agents as their core engine. They open entirely new categories of software and often monetize differently, through usage credits, API access, or rate limiting rather than traditional subscription models.
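Usage credits and rate limits are often enforced with a simple mechanism such as a token bucket (here "token" means a unit of allowance, not an LLM token). The sketch below shows one common way to cap a plan's request rate; the plan sizes are hypothetical and the code is not tied to any particular product's billing system.

```python
import time
from typing import Callable


class TokenBucket:
    """Rate limiter: bursts up to `capacity` units, refilled at `rate` units per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical plan: 3-request burst, 1 request/second sustained.
# A fake clock keeps the demo deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=3, rate=1.0, clock=lambda: fake_now[0])
first_burst = [bucket.allow() for _ in range(4)]
print(first_burst)     # [True, True, True, False]
fake_now[0] = 2.0
print(bucket.allow())  # True: two seconds refilled two units
```

A higher-priced tier would simply get a larger `capacity` and `rate`, which is how metered AI-first products can price by consumption rather than by seat.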

This distinction matters for competitive positioning. Retrofitted AI features become table stakes rapidly; if one application adds them, competitors follow within months. AI-first applications, by contrast, can create defensible advantages by enabling workflows that were previously impossible.

The barriers to building AI-first applications have collapsed. What once required months of coding can now be accomplished in days using development platforms that let anyone with minimal technical knowledge create fully functional applications. Web development startup Lovable, for instance, now ships code 20 times faster with agentic coding tools than it could by writing it manually. This democratization will accelerate in 2026, with small teams and solo builders shipping solutions that challenge legacy vendors.

7. The ROI Imperative and Measurement Maturity

For three years, enterprises have been promised that AI adoption would deliver transformative returns. In 2026, organizations will demand proof.

The early evidence is encouraging. Anthropic's research found that 80 percent of organizations report their AI agent investments are already delivering measurable economic impact, and confidence is even higher looking forward: 88 percent expect continued or increased returns. The question facing leaders is no longer whether to invest but how to scale what's working.

Organizations expect AI agents to deliver efficiency gains over the next twelve months, with 44 percent anticipating faster task completion. Enterprises also anticipate cost savings from their agent deployments, particularly as they reach scale.

The highest-impact use cases beyond coding include data analysis and report generation (60 percent cite this as among the most impactful applications) and internal process automation (48 percent). These functions share characteristics that make them ideal proving grounds: high-volume repetitive work, fast iteration cycles, and clear performance metrics that make ROI measurable.

Software development (57 percent) and customer service (55 percent) are expected to see the greatest near-term impact, with marketing and sales (46 percent) and supply chain operations (44 percent) close behind. The proximity in expected impact across these functions suggests multiple viable entry points rather than one dominant use case.

As agents operate continuously, cost management becomes critical. Poorly configured agent interactions can trigger cascading actions, unpredictable resource consumption, and ballooning expenses. Organizations need specialized financial operations frameworks (FinOps for agents) to monitor and control costs, accounting for token-based pricing models that differ fundamentally from traditional software licensing.
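One basic FinOps control is a per-run budget ceiling that converts token counts into spend and stops a runaway loop before it overspends. The sketch below is a minimal illustration under assumptions: the per-1,000-token prices and the ceiling are hypothetical, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass
class BudgetGuard:
    """Accumulates token spend for one agent run and halts the run past a ceiling."""
    usd_per_1k_input: float   # hypothetical price per 1,000 input tokens
    usd_per_1k_output: float  # hypothetical price per 1,000 output tokens
    ceiling_usd: float
    spent_usd: float = 0.0

    def charge(self, input_tokens: int, output_tokens: int) -> float:
        cost = ((input_tokens / 1000) * self.usd_per_1k_input
                + (output_tokens / 1000) * self.usd_per_1k_output)
        if self.spent_usd + cost > self.ceiling_usd:
            # Refuse the call before spending, so a cascading loop stops here.
            raise RuntimeError(
                f"budget exceeded: {self.spent_usd + cost:.4f} > {self.ceiling_usd:.4f} USD")
        self.spent_usd += cost
        return cost


guard = BudgetGuard(usd_per_1k_input=0.003, usd_per_1k_output=0.015, ceiling_usd=0.05)
steps = 0
try:
    # Simulate an agent loop that keeps calling the model.
    for _ in range(10):
        guard.charge(input_tokens=2000, output_tokens=500)
        steps += 1
except RuntimeError as err:
    print(f"stopped after {steps} steps: {err}")
```

Checking the ceiling before recording the charge means the guard fails closed: the call that would cross the limit is refused rather than billed.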

8. The Shift Toward Inference Economics

As the initial gold rush for training models subsides, 2026 is seeing a fundamental pivot toward inference economics. For years, the strategic focus was on the massive capital expenditure required to train "frontier" models. Now the battleground has shifted to the cost and latency of running those models at scale.

Enterprises are moving away from using a single, monolithic LLM for every query. Instead, they are deploying small language models (SLMs) and domain-specific models, which can be as small as 1/100th the size of frontier models, fine-tuned for accurate, low-cost execution. This shift enables "edge reasoning," where AI processing happens locally on devices or private servers rather than in expensive cloud clusters. As these smaller architectures mature, the cost of an "intelligent unit of work" has plummeted, making it viable to embed AI into billions of low-stakes micro-interactions that were previously too expensive to automate.
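The economics of this shift come from routing: send routine queries to a cheap small model and reserve the large model for the few that need it. The sketch below assumes hypothetical model names, prices, and a crude length-and-flag heuristic; production routers typically use a trained classifier instead.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Model:
    name: str
    usd_per_1k_tokens: float


SMALL = Model("domain-slm", 0.0002)  # hypothetical fine-tuned small model
LARGE = Model("frontier-llm", 0.01)  # hypothetical general-purpose model


def route(query: str, needs_reasoning: bool = False) -> Model:
    # Crude heuristic stand-in for a learned router.
    if needs_reasoning or len(query.split()) > 50:
        return LARGE
    return SMALL


def estimate_cost(queries: List[Tuple[str, bool]], tokens_per_query: int = 500) -> float:
    total = 0.0
    for text, hard in queries:
        total += tokens_per_query / 1000 * route(text, hard).usd_per_1k_tokens
    return total


# Hypothetical workload: 950 routine lookups, 50 queries that need deeper reasoning.
workload = [("check order status", False)] * 950 + [("analyze contract clause", True)] * 50
routed = estimate_cost(workload)
all_large = len(workload) * 500 / 1000 * LARGE.usd_per_1k_tokens
print(f"routed: ${routed:.3f}, all-large: ${all_large:.2f}")
```

With these made-up prices the routed workload costs about a fifteenth of sending everything to the large model; the real ratio depends entirely on actual prices and on how much traffic is genuinely routine.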

9. From Silos to Agentic Orchestration Meshes

The most advanced organizations have moved past deploying isolated bots and are now building Agentic Orchestration Meshes. In this model, agents are no longer just tools; they are treated as digital employees with specific identities and roles that must collaborate across different platforms and vendors.

The Model Context Protocol (MCP), together with agent-to-agent standards such as A2A, has been the catalyst for this change. These protocols provide a standardized "handshake" that gives agents common access to tools and data and allows a customer service agent from one vendor to securely pass context to a logistics agent from another. This prevents the "agent silo" problem, in which AI systems become islands of automation. In 2026, the competitive advantage lies not in the intelligence of a single agent but in the efficiency of the "mesh": the connective tissue that ensures data, authority, and intent are preserved as tasks move through a multi-agent ecosystem.
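What "preserving data, authority, and intent" might mean at a handoff can be sketched as an envelope that travels with the task. This structure is hypothetical and is not defined by MCP, A2A, or any other protocol; it only illustrates one design rule, that authority may narrow but never widen as a task moves between agents.

```python
from dataclasses import dataclass, field, replace
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class Handoff:
    intent: str                 # what the originating request wants done
    on_behalf_of: str           # whose authority the receiving agent acts under
    scopes: Tuple[str, ...]     # actions the receiving agent is allowed to take
    context: Dict[str, Any] = field(default_factory=dict)  # task data carried forward
    hops: Tuple[str, ...] = ()  # agents that have already handled the task


def forward(h: Handoff, from_agent: str, drop_scopes: Tuple[str, ...] = ()) -> Handoff:
    """Hand a task to the next agent: record the hop and optionally narrow authority.

    Scopes can only be removed here, never added, so authority cannot widen in transit.
    """
    kept = tuple(s for s in h.scopes if s not in drop_scopes)
    return replace(h, scopes=kept, hops=h.hops + (from_agent,))


request = Handoff(
    intent="reship damaged order",
    on_behalf_of="customer:8812",  # hypothetical identifiers throughout
    scopes=("read_order", "create_shipment", "issue_refund"),
    context={"order_id": "A-1001"},
)
to_logistics = forward(request, "service-agent", drop_scopes=("issue_refund",))
print(to_logistics.scopes)  # ('read_order', 'create_shipment')
print(to_logistics.hops)    # ('service-agent',)
```

The frozen dataclass means every hop produces a new envelope, so earlier versions remain available for auditing.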

10. The Governance and Risk Management Challenge

As agents gain autonomy, organizations must establish oversight mechanisms for systems designed to operate independently. Traditional IT governance models don't account for AI that makes decisions and takes actions without human approval at each step.

The risk landscape is evolving. One emerging concern involves "workslop," AI-generated content that appears polished but lacks substance, shifting cognitive burden downstream and creating more work for recipients. Poorly designed agentic applications can actually reduce efficiency rather than improve it, making organizations less productive despite their AI investments.

Beyond quality concerns, security and compliance requirements intensify as agents handle sensitive data and make consequential decisions. Organizations must implement zero-trust architectures with ephemeral authentication systems that continuously verify and authorize agent actions. They need immutable logs for every agent decision, cryptographic receipts for transactions, and clear audit trails establishing under whose authority agents acted.
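A common building block for tamper-evident agent logs is a hash chain, in which each entry commits to the hash of the one before it, so altering any past record breaks verification. The sketch below assumes hypothetical agent and user identifiers. It makes tampering detectable, not impossible; true immutability would also need write-once storage or external anchoring, and cryptographic receipts would typically add digital signatures, which are not shown.

```python
import hashlib
import json
from typing import Any, Dict, List


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    # Canonical JSON so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, agent: str, action: str, on_behalf_of: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"agent": agent, "action": action, "on_behalf_of": on_behalf_of}
        entry_hash = _digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        prev = self.GENESIS
        for e in self.entries:
            if e["prev"] != prev or _digest(prev, e["record"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True


log = AuditLog()
log.append("billing-agent", "refund 40 USD", "user:alice")
log.append("billing-agent", "close ticket 311", "user:alice")
print(log.verify())  # True
log.entries[0]["record"]["action"] = "refund 400 USD"  # simulate tampering
print(log.verify())  # False
```

Recording `on_behalf_of` in every entry is what lets the audit trail answer under whose authority an agent acted.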

The autonomy spectrum matters here. Organizations must define clear boundaries for agent decision-making through graduated autonomy levels with appropriate human oversight triggers. The progression moves from augmentation (agents enhancing human capabilities) through automation (agents handling tasks within human-defined processes) toward eventual autonomy (agents working with minimal oversight).
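Graduated autonomy can be expressed as an explicit policy that decides when an action must go to a human. The sketch below maps the three levels described above to oversight triggers; the dollar thresholds and the "novel case" flag are hypothetical examples of triggers, not a recommended policy.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    AUGMENTATION = 1  # agent drafts, a human decides
    AUTOMATION = 2    # agent acts inside a human-defined process
    AUTONOMY = 3      # agent acts with minimal oversight


def needs_human(level: Autonomy, amount_usd: float, novel_case: bool,
                auto_limit_usd: float = 500.0) -> bool:
    """Return True when a (hypothetical) oversight trigger should route the action to a person."""
    if level == Autonomy.AUGMENTATION:
        return True  # every action is a recommendation awaiting approval
    if novel_case:
        return True  # exceptions requiring judgment always reach a supervisor
    if level == Autonomy.AUTOMATION:
        return amount_usd > auto_limit_usd
    return amount_usd > 10 * auto_limit_usd  # full autonomy still has an outer bound


print(needs_human(Autonomy.AUTOMATION, 800, novel_case=False))  # True
print(needs_human(Autonomy.AUTONOMY, 800, novel_case=False))    # False
```

Keeping the policy in one function makes it easy to raise an agent's level as its track record improves, without touching the agent itself.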

Success requires deploying what experts call "agent supervisors," humans who enter workflows at intentionally designed points to handle exceptions requiring judgment. This is not about checking agents' work but about strategic handoffs at critical decision points. As AI technology improves, organizations should continually assess capabilities to ensure they are delegating responsibilities that agents can handle reliably.

The regulatory environment adds another layer of complexity. Meta's Manus acquisition, for instance, faces scrutiny because of the startup's Chinese origins, reflecting broader concerns about AI security and cross-border technology transfers. Organizations deploying agents must navigate an evolving landscape of AI governance requirements that varies by jurisdiction and industry.

The Path Forward

The patterns emerging for 2026 are clear. Platforms are converging as foundational models become interchangeable. Multi-agent architectures are replacing monolithic solutions. Applications are embedding AI as existing software adds features to remain competitive. Builders are rising as barriers to creating AI-first applications collapse. And workflows are being reimagined as organizations recognize that agents require process redesign, not just technology deployment.

Yet adoption across industries, particularly in non-tech sectors, will take time. Teams need to change how they work. Ethical, legal, and data concerns must be addressed. And perhaps the biggest barrier: people need time to learn and experiment.

The analogy to the early internet is instructive. Amazon was founded in 1994 and did not turn a full-year profit until 2003, nine years later. It took decades for infrastructure, applications, and business models to mature. AI will move faster, but the evolution will follow similar patterns.

The organizations that thrive in 2026 will be those that move beyond viewing AI as a technology initiative and begin treating it as a transformation of work itself. They will redesign processes rather than automate existing ones. They will build governance frameworks that match the autonomy they grant to agents. They will invest in multi-agent architectures that can scale and adapt. And they will develop the human capabilities (change fitness, AI fluency, strategic judgment) that remain essential even as machines take on more execution.

AI is no longer the product. It is an ingredient. The real strategic advantage comes from how organizations apply it, not which model they choose, but what they build with it.
